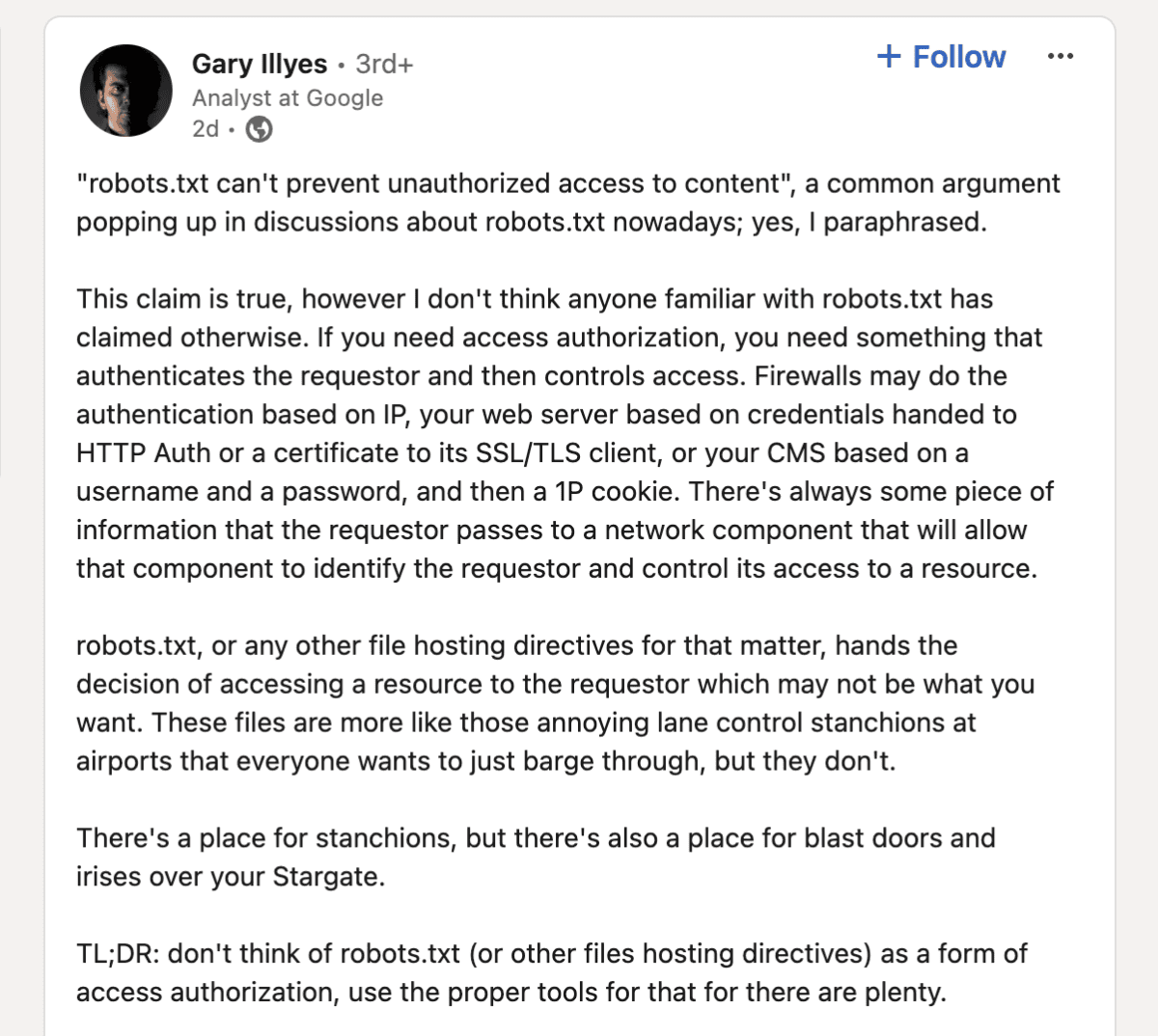

Google’s Gary Illyes recently sparked a lively discussion about robots.txt, shedding light on common misconceptions and offering crucial advice for website owners. Let’s dive into what this means for your site’s security and SEO.

The Truth About Robots.txt and Website Access

Gary Illyes, a seasoned analyst at Google, tackled a hot topic in the SEO world: the limitations of robots.txt. He confirmed what many experts have been saying – robots.txt isn’t a foolproof method for blocking unauthorized access to your website’s content.

Key takeaway: Robots.txt is more like a “please don’t enter” sign than a locked door.

What Robots.txt Can and Can’t Do

Robots.txt is a simple text file that gives instructions to web crawlers. It’s like leaving a note for visitors, but it relies on their goodwill to follow the rules.

- Can do:

  - Ask well-behaved crawlers not to access certain pages

  - Help manage your site’s crawl budget

  - Guide search engines on what to crawl (it does not control what gets indexed)

- Can’t do:

  - Physically prevent access to any part of your site

  - Stop malicious bots or hackers

  - Replace proper security measures
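To make the “goodwill” point concrete, here is a minimal sketch of how a polite crawler might consult robots.txt, using Python’s standard `urllib.robotparser`. The file contents, the `PoliteBot` name, and the `example.com` URLs are assumptions made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the robots.txt rules permit user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# A polite crawler runs this check itself and skips disallowed URLs.
# Nothing on the server enforces it: a client that never calls
# is_allowed() can still request /private/ directly.
print(is_allowed(ROBOTS_TXT, "PoliteBot", "https://example.com/private/report.html"))  # False
print(is_allowed(ROBOTS_TXT, "PoliteBot", "https://example.com/blog/"))  # True
```

Notice that the check runs entirely on the crawler’s side, which is exactly why robots.txt cannot stop a client that chooses not to run it.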

The Real Deal on Website Security

Illyes emphasizes that for true access control, you need tools that actively authenticate and manage who can view your content. Let’s break down some options:

- Firewalls: These act like bouncers, checking IPs and deciding who gets in.

- Web Server Authentication: Uses HTTP authentication or SSL/TLS client certificates to verify visitors.

- Content Management Systems (CMS): Require usernames, passwords, and cookies to grant access.

Remember: The key is having a system that makes decisions about access, rather than relying on the crawler to follow instructions.
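As a rough illustration of a system that makes the access decision itself, the sketch below checks an HTTP Basic `Authorization` header on the server side. The credentials and the `is_authorized` and `status_for` helpers are hypothetical; a real deployment would rely on the web server’s or CMS’s built-in authentication and store hashed passwords.

```python
import base64
import hmac

# Hypothetical credentials for illustration; real systems store salted hashes.
EXPECTED_USER = "editor"
EXPECTED_PASSWORD = "correct-horse-battery-staple"


def is_authorized(authorization_header):
    """Return True only if the header carries the expected Basic credentials."""
    if not authorization_header or not authorization_header.startswith("Basic "):
        return False
    try:
        encoded = authorization_header[len("Basic "):]
        decoded = base64.b64decode(encoded, validate=True).decode("utf-8")
    except ValueError:  # covers malformed base64 and invalid UTF-8
        return False
    user, separator, password = decoded.partition(":")
    if not separator:
        return False
    # compare_digest avoids leaking information through comparison timing.
    user_ok = hmac.compare_digest(user.encode(), EXPECTED_USER.encode())
    password_ok = hmac.compare_digest(password.encode(), EXPECTED_PASSWORD.encode())
    return user_ok and password_ok


def status_for(authorization_header):
    """The server decides: 200 for valid credentials, 401 for everything else."""
    return 200 if is_authorized(authorization_header) else 401
```

Unlike robots.txt, this check runs on the server for every request, so a crawler that ignores instructions still receives a 401.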

Robots.txt: More Like Airport Stanchions Than Blast Doors

Illyes uses a clever analogy to explain robots.txt:

“These files are more like those annoying lane control stanchions at airports that everyone wants to just barge through, but they don’t.”

Think of it this way:

- Robots.txt = Airport stanchions

  - They guide polite visitors

  - Easy to ignore if someone really wants to

- Proper security = Blast doors

  - Actually prevent unauthorized entry

  - Necessary for sensitive areas

Real-World Consequences: Bing’s Fabrice Canel Weighs In

Microsoft Bing’s Fabrice Canel added an important perspective to the discussion. He pointed out a dangerous trend:

“We and other search engines frequently encounter issues with websites that directly expose private content and attempt to conceal the security problem using robots.txt.”

This highlights a critical misunderstanding among some website owners. They mistakenly believe that using robots.txt to hide sensitive URLs is enough to keep that information safe. In reality, this approach can backfire:

- It doesn’t actually prevent access

- It can draw attention to those “hidden” areas, because anyone can read a site’s robots.txt file

- Anyone who ignores the file, including hackers, can request the listed URLs directly
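To see why listing sensitive paths can backfire, consider how little effort it takes to read them back out. The following is a minimal sketch; the `disallowed_paths` helper and the sample file are assumptions for illustration, not a tool mentioned by Illyes or Canel.

```python
def disallowed_paths(robots_txt: str) -> list[str]:
    """Collect every non-empty Disallow value from a robots.txt body."""
    paths = []
    for raw_line in robots_txt.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop trailing comments
        field, separator, value = line.partition(":")
        if separator and field.strip().lower() == "disallow" and value.strip():
            paths.append(value.strip())
    return paths


# Hypothetical robots.txt that tries to "hide" sensitive areas.
SAMPLE = """\
User-agent: *
Disallow: /admin/
Disallow: /backups/  # nightly dumps
Allow: /
Disallow:
"""

print(disallowed_paths(SAMPLE))  # ['/admin/', '/backups/']
```

Because robots.txt sits at a well-known public URL, a file like this sample works as a map of places worth probing unless those paths are also protected by real access controls.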

Choosing the Right Tools for the Job

So, if robots.txt isn’t the answer for securing sensitive content, what should you use? Here are some better options:

1. Web Application Firewalls (WAF)

- What they do: Analyze incoming traffic and block suspicious requests

- Benefits:

  - Can stop malicious bots and hackers

  - Often offer DDoS protection

  - Some can adapt to new threats

Example: Cloudflare WAF is popular for its ease of use and powerful features.

2. Password Protection

- What it does: Requires users to log in before accessing content

- Benefits:

  - Simple to implement

  - Familiar to users

  - Can be combined with other security measures

Tip: Use strong, unique passwords and consider two-factor authentication for extra security.

3. IP-based Access Control

- What it does: Restricts access based on the visitor’s IP address

- Benefits:

  - Good for internal content or geographically restricted material

  - Can block entire ranges of suspicious IPs

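Caution: An IP address is a weak form of identity. Addresses can be shared, reassigned, hidden behind proxies or VPNs, and in some cases spoofed, so don’t rely on this method alone for highly sensitive data.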
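For a sense of what an IP allowlist check looks like, here is a small sketch built on Python’s standard `ipaddress` module. The network ranges and the `ip_is_allowed` helper are assumptions chosen for illustration; in practice this rule usually lives in a firewall or web server configuration.

```python
import ipaddress

# Hypothetical allowlist: an internal range plus one documentation range.
ALLOWED_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("203.0.113.0/24"),
]


def ip_is_allowed(client_ip: str) -> bool:
    """Return True if client_ip falls inside any allowlisted network."""
    try:
        address = ipaddress.ip_address(client_ip)
    except ValueError:
        # Deny anything that is not a well-formed IP address.
        return False
    return any(address in network for network in ALLOWED_NETWORKS)


print(ip_is_allowed("10.1.2.3"))      # True
print(ip_is_allowed("198.51.100.1"))  # False
```

The check denies by default, which is the safer choice when the input is malformed or unexpected.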

4. SSL/TLS Certificates

- What they do: Authenticate your server to visitors and encrypt the data sent between them (on their own, they do not restrict who can view a page)

- Benefits:

  - Protects against eavesdropping

  - Builds trust with visitors

  - Often required for e-commerce sites

Pro tip: Always keep your SSL certificates up to date!
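One way to keep on top of renewals is to check how many days a certificate has left. The sketch below uses Python’s standard `ssl` and `socket` modules; the `days_until_expiry` and `fetch_not_after` helpers are hypothetical names, and the dates are made up for illustration.

```python
import socket
import ssl


def days_until_expiry(not_after: str, now_epoch: float) -> float:
    """Days between now_epoch and a certificate's notAfter timestamp.

    not_after uses the format returned by getpeercert(),
    for example "Jan  1 00:00:00 2030 GMT".
    """
    expires_epoch = ssl.cert_time_to_seconds(not_after)
    return (expires_epoch - now_epoch) / 86400


def fetch_not_after(host: str, port: int = 443) -> str:
    """Read the notAfter field from a live server (needs network access)."""
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=10) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()["notAfter"]


# Offline example with made-up dates: ten days remain.
now = ssl.cert_time_to_seconds("Dec 22 00:00:00 2029 GMT")
print(days_until_expiry("Jan  1 00:00:00 2030 GMT", now))  # 10.0
```

A scheduled job could call `fetch_not_after` for each of your hostnames and alert when the remaining days drop below a threshold you choose.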

Implementing Proper Access Controls: A Step-by-Step Guide

- Assess your needs:

  - Identify what content needs protection

  - Determine the level of security required

- Choose the right tools:

  - Consider a mix of methods for layered security

  - Balance security with user experience

- Configure your chosen solutions:

  - Follow best practices for each tool

  - Test thoroughly before going live

- Monitor and maintain:

  - Regularly review access logs

  - Keep security measures updated

- Educate your team:

  - Ensure everyone understands the importance of proper security

  - Train staff on using access control tools correctly
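The “review access logs” step can be partly automated. As a minimal sketch, assuming logs in the common Apache/Nginx access log format, the helper below flags requests that reached paths you disallow in robots.txt. The `flag_disallowed_hits` name, the sample log lines, and the `/private/` prefix are all hypothetical.

```python
import re

# Matches the start of a Common Log Format line: client IP, timestamp,
# request line, and status code.
LOG_PATTERN = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[[^\]]+\] '
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" (?P<status>\d{3})'
)


def flag_disallowed_hits(log_lines, disallowed_prefixes):
    """Return (ip, path, status) for requests under a disallowed prefix."""
    prefixes = tuple(disallowed_prefixes)
    hits = []
    for line in log_lines:
        match = LOG_PATTERN.match(line)
        if match and match["path"].startswith(prefixes):
            hits.append((match["ip"], match["path"], int(match["status"])))
    return hits


# Hypothetical log excerpt for illustration.
SAMPLE_LOG = [
    '198.51.100.23 - - [10/Oct/2024:13:55:36 +0000] "GET /private/report.pdf HTTP/1.1" 200 5120',
    '203.0.113.9 - - [10/Oct/2024:13:56:01 +0000] "GET /blog/ HTTP/1.1" 200 2048',
]

print(flag_disallowed_hits(SAMPLE_LOG, ["/private/"]))
# [('198.51.100.23', '/private/report.pdf', 200)]
```

A 200 status on a disallowed path is the warning sign: the content was served to a client that ignored robots.txt, so that path needs real access control.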

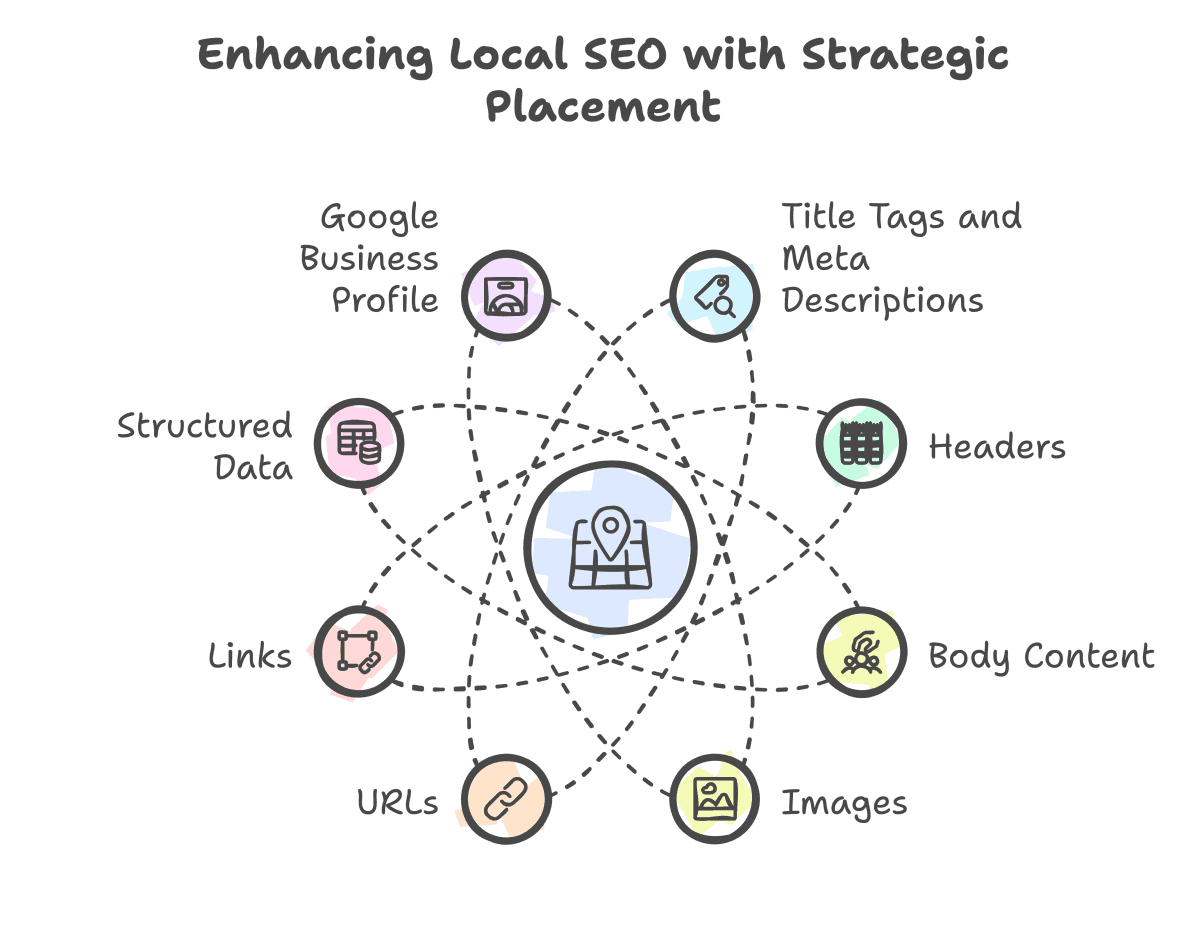

The Role of Robots.txt in Your Overall Strategy

While robots.txt isn’t a security tool, it still has its place in your website management:

- Use it for:

  - Managing how search engines crawl your site

  - Keeping crawlers away from non-sensitive but unnecessary pages (use a noindex directive instead if a page must stay out of search results)

  - Optimizing your crawl budget

- Don’t use it for:

  - Hiding sensitive information

  - Blocking malicious bots

  - Replacing proper access controls

Key Takeaways: What Every Website Owner Should Remember

- Robots.txt is not a security measure. It’s a polite request, not a locked door.

- Use proper access controls for sensitive content (firewalls, authentication, etc.).

- Don’t rely on “security through obscurity.” Hiding URLs in robots.txt won’t protect them.

- Layer your security measures for the best protection.

- Regularly audit and update your security practices to stay ahead of new threats.

Moving Forward: Best Practices for Website Security and SEO

Balancing security with SEO can be tricky, but it’s essential for a healthy website. Here are some tips:

- Protect sensitive content with proper access controls, not robots.txt

- Use robots.txt strategically to guide search engines and manage crawl budget

- Implement HTTPS across your entire site for better security and SEO benefits

- Regularly audit your site for potential vulnerabilities

- Stay informed about the latest security best practices and SEO guidelines

By following these guidelines, you’ll create a website that’s both secure and search engine friendly. Remember, good security practices often align with good SEO practices – they both aim to create a better experience for your legitimate users.

Conclusion: Security and SEO Go Hand in Hand

Gary Illyes’ comments serve as a vital reminder: don’t confuse robots.txt with real security measures. While robots.txt plays an important role in managing how search engines interact with your site, it’s not a substitute for proper access controls.

By understanding the true purpose of robots.txt and implementing robust security measures, you’ll protect your sensitive content while still maintaining a strong SEO presence. It’s not about choosing between security and visibility – with the right approach, you can have both.

Take action today: Review your website’s security measures and make sure you’re using the right tools for the job. Your users (and search engines) will thank you!